Page 2 :

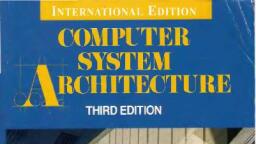

MALLA REDDY COLLEGE OF ENGINEERING & TECHNOLOGY, DEPARTMENT OF INFORMATION TECHNOLOGY, (R17A0510) COMPUTER ORGANIZATION, OBJECTIVES:, • To understand basic components of system, • To explore the memory organization, • To explore I/O organization in depth, • Ability to analyze the hardware and software issues related to computers and the interface between, the two., UNIT I :, BASIC STRUCTURE OF COMPUTERS: Computer Types, Functional unit, Basic OPERATIONAL concepts, Bus, structures, Software, Performance, multiprocessors and multi computers. Data Representation. Fixed Point, Representation. Floating – Point Representation. Error Detection codes., REGISTER TRANSFER LANGUAGE AND MICRO OPERATIONS : Register Transfer language. Register Transfer Bus, and memory transfers, Arithmetic Micro operations, logic micro operations, shift micro operations, Arithmetic, logic shift unit., UNIT-II:, Basic Computer Organization and Design:, Instruction codes. Computer Registers Computer instructions, Timing and Control, Instruction cycle. Memory, Reference Instructions, Input – Output and Interrupt, Complete Computer Description., Micro Programmed Control: Control memory, Address sequencing, micro program example, design of control, unit, micro Programmed control, UNIT-III:, Computer Processing Unit Organization: General Register Organization ,STACK organization, Instruction, Formats, Addressing modes, Data Transfer and Manipulation ,Program Control. CISC and RISC. Computer, Arithmetic: Addition and subtraction, multiplication Algorithms, Division Algorithms, Floating – point, Arithmetic operations. BCD Adder, UNIT-IV:, Input-Output Organization: Peripheral Devices, Input-Output Interface, Asynchronous data transfer Modes of, Transfer, Priority Interrupt Direct memory Access, Input –Output Processor (IOP), Pipeline And Vector Processing: Parallel Processing, Pipelining, Arithmetic Pipeline, Instruction Pipeline,, Dependencies, Vector Processing., UNIT-V:, Memory Organization: Memory Hierarchy, Main Memory –RAM And ROM Chips, Memory Address map,, Auxiliary memory-magnetic Disks, Magnetic tapes, Associate Memory,-Hardware Organization, Match Logic,, Cache Memory –Associative Mapping , Direct Mapping, Set associative mapping ,Writing in to cache and cache, Initialization , Cache Coherence ,Virtual memory-Address Space and memory Space ,Address mapping using, pages, Associative memory page table ,page Replacement ., TEXT BOOKS:, 1. “Computer Organization” special edition-MRCET,TATA McGraw Hill. 2017, 2. Computer Organization – Carl Hamacher, Zvonks Vranesic, SafeaZaky, Vth Edition, McGraw Hill., 3. Computer Systems Architecture – M.Moris Mano, IIIrd Edition, Pearson/PHI, REFERENCES:, 1. Computer Organization and Architecture – William Stallings Sixth Edition, Pearson/PHI, 2. Structured Computer Organization – Andrew S. Tanenbaum, 4th Edition PHI/Pearson, 3. Fundamentals or Computer Organization and Design, - Sivaraama Dandamudi Springer Int. Edition., 4. Computer Architecture a quantitative approach, John L. Hennessy and David A. Patterson, Fourth, Edition Elsevier, 5. Computer Architecture: Fundamentals and principles of Computer Design, Joseph D. Dumas II, BS, Publication.

Page 3 :

MALLA REDDY COLLEGE OF ENGINEERING & TECHNOLOGY, DEPARTMENT OF INFORMATION TECHNOLOGY, , INDEX, S. No, , Topic, , Unit, , Page no, , 1, , I, , Computer Types, , 1, , 2, , I, , Functional unit, , 4, , 3, , I, , Basic OPERATIONAL concepts, , 6, , 4, , I, , Bus structures, , 8, , 5, , I, , Software, Performance, , 9, , 6, , I, , multiprocessors and multi computers, , 11, , 7, , I, , Data Representation, , 11, , 8, , I, , Error Detection codes., , 12, , 9, , I, , Register Transfer language, , 13, , 10, , II, , 11, 12, 13, 14, 15, 16, 17, , II, II, II, II, II, III, III, , Basic Computer Organization and Design:, , Instruction codes. Computer Registers, , 29, , Memory reference instructions, , 40, , Instruction cycle, , 41, , Micro Programmed Control, , 42, , Address sequencing, , 43, , Computer Processing Unit Organization, General Register Organization, , 45

Page 4 :

18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36, 37, , III, III, III, III, , STACK organization, Instruction Formats, , 46, , Addressing modes, , 50, , Data Transfer and Manipulation ,Program Control, , 55, , Computer airthmatic, , 60, , III, , Multiplication Algorithm, III, Division algorithm, , IV, IV, IV, IV, IV, IV, IV, IV, IV, V, V, V, V, V, V, , 63, 63, , Input-Output Organization, Peripheral Devices, Input-Output Interface, , 76, , Asynchronous data transfer Modes of Transfer, , 77, , Priority Interrupt Direct memory Access, , 82, , Input –Output Processor (IOP), , 84, , Pipeline And Vector Processing, Parallel Processing, Pipelining, , 85, , Arithmetic Pipeline, Instruction Pipeline, , 86, , Dependencies, Vector Processing, , 87, , Memory Organization, , Memory Hierarchy, Main Memory –RAM And ROM Chips, , 89, , Memory Address map, Auxiliary memory-magnetic Disks, , 91, , Match Logic, Cache Memory –Associative Mapping , Direct, Mapping, , 92, , Set associative mapping ,Writing in to cache and cache, Initialization, , 93, , Cache Coherence ,Virtual memory-Address Space and memory, Space, , 94

Page 5 :

V, V, , Address mapping using pages, Associative memory page table, , 95, , page Replacement, , 96

Page 6 :

Computer Organization, , [IT II – I ], UNIT I, , Basic Structure Of Computers: Computer Types, Functional unit, Basic OPERATIONAL concepts,, Bus structures, Software, Performance, multiprocessors and multi computers., Data Representation: Fixed Point Representation. Floating – Point Representation. Error Detection, codes., Register Transfer Language And Micro Operations: Register Transfer language. Register, Transfer Bus and memory transfers, Arithmetic Micro operations, logic micro operations, shift micro, operations, Arithmetic logic shift unit., , Basic Structure of Computers, Computer Architecture in general covers three aspects of computer design namely: Computer, Hardware, Instruction set Architecture and Computer Organization., Computer hardware consists of electronic circuits, displays, magnetic and optical storage, media and communication facilities., Instruction set Architecture is programmer visible machine interface such as instruction set,, registers, memory organization and exception handling. Two main approaches are mainly, CISC (Complex Instruction Set Computer) and RISC (Reduced Instruction Set Computer), Computer Organization includes the high level aspects of a design, such as memory system, the, bus structure and the design of the internal CPU., , Computer Types, Computer is a fast electronic calculating machine which accepts digital input, processes it, according to the internally stored instructions (Programs) and produces the result on the, output device. The internal operation of the computer can be as depicted in the figure below:, , Figure 1: Fetch, Decode and Execute steps in a Computer System, , Computer Organization, , Page 1

Page 7 :

Computer Organization, , [IT II – I ], , The computers can be classified into various categories as given below:, , , , , , , , , Micro Computer, Laptop Computer, Work Station, Super Computer, Main Frame, Hand Held, Multi core, , Micro Computer: A personal computer; designed to meet the computer needs of an, individual. Provides access to a wide variety of computing applications, such as word, processing, photo editing, e-mail, and internet., Laptop Computer: A portable, compact computer that can run on power supply or a battery, unit. All components are integrated as one compact unit. It is generally more expensive than a, comparable desktop. It is also called a Notebook., Work Station: Powerful desktop computer designed for specialized tasks. Generally used for, tasks that requires a lot of processing speed. Can also be an ordinary personal computer, attached to a LAN (local area network)., Super Computer: A computer that is considered to be fastest in the world. Used to execute, tasks that would take lot of time for other computers. For Ex: Modeling weather systems,, genome sequence, etc (Refer site: http://www.top500.org/), Main Frame: Large expensive computer capable of simultaneously processing data for, hundreds or thousands of users. Used to store, manage, and process large amounts of data that, need to be reliable, secure, and centralized., Hand Held: It is also called a PDA (Personal Digital Assistant). A computer that fits into a, pocket, runs on batteries, and is used while holding the unit in your hand. Typically used as, an appointment book, address book, calculator and notepad., Multi Core: Have Multiple Cores – parallel computing platforms. Many Cores or computing, elements in a single chip. Typical Examples: Sony Play station, Core 2 Duo, i3, i7 etc., , GENERATION OF COMPUTERS, Development of technologies used to fabricate the processors, memories and I/O units of, the computers has been divided into various generations as given below:, First generation, Second generation, Third generation, Fourth generation, Beyond the fourth generation, , Computer Organization, , Page 2

Page 8 :

Computer Organization, , [IT II – I ], , First generation:, 1946 to 1955: Computers of this generation used Vacuum Tubes. The computes were built using, stored program concept. Ex: ENIAC, EDSAC, IBM 701., Computers of this age typically used about ten thousand vacuum tubes. They were bulky in, size had slow operating speed, short life time and limited programming facilities., Second generation:, 1955 to 1965: Computers of this generation used the germanium transistors as the active, switching electronic device. Ex: IBM 7000, B5000, IBM 1401. Comparatively smaller in, size About ten times faster operating speed as compared to first generation vacuum tube, based computers. Consumed less power, had fairly good reliability. Availability of large, memory was an added advantage., Third generation:, 1965 to 1975: The computers of this generation used the Integrated Circuits as the active, electronic components. Ex: IBM system 360, PDP minicomputer etc. They were still smaller, in size. They had powerful CPUs with the capacity of executing 1 million instructions per, second (MIPS). Used to consume very less power consumption., Fourth generation:, 1976 to 1990: The computers of this generation used the LSI chips like microprocessor as, their active electronic element. HCL horizen III, and WIPRO‟S Uniplus+ HCL‟s Busybee, PC etc., They used high speed microprocessor as CPU. They were more user friendly and highly reliable, systems. They had large storage capacity disk memories., Beyond Fourth Generation:, 1990 onwards: Specialized and dedicated VLSI chips are used to control specific functions, of these computers. Modern Desktop PC‟s, Laptops or Notebook Computers., , Computer Organization, , Page 3

Page 9 :

Computer Organization, , [IT II – I ], , Functional Unit, A computer in its simplest form comprises five functional units namely input unit, output unit, memory unit, arithmetic & logic unit and control unit. Figure 2 depicts the functional units of, a computer system., , Figure 2: Basic functional units of a computer, , Let us discuss about each of them in brief:, 1. Input Unit: Computer accepts encoded information through input unit. The, standard input device is a keyboard. Whenever a key is pressed, keyboard, controller sends the code to CPU/Memory., Examples include Mouse, Joystick, Tracker ball, Light pen, Digitizer, Scanner etc., 2. Memory Unit: Memory unit stores the program instructions (Code), data, and results of computations etc. Memory unit is classified as:, , , Primary /Main Memory, , , , Secondary /Auxiliary Memory, , Computer Organization, , Page 4

Page 10 :

Computer Organization, , [IT II – I ], , Primary memory is a semiconductor memory that provides access at high speed., Run time program instructions and operands are stored in the main memory. Main, memory is classified again as ROM and RAM. ROM holds system programs and, firmware routines such as BIOS, POST, I/O Drivers that are essential to manage the, hardware of a computer. RAM is termed as Read/Write memory or user memory that, holds run time program instruction and data. While primary storage is essential, it is, volatile in nature and expensive. Additional requirement of memory could be supplied, as auxiliary memory at cheaper cost. Secondary memories are non volatile in nature., 3. Arithmetic and logic unit: ALU consist of necessary logic circuits like adder,, comparator etc., to perform operations of addition, multiplication, comparison of two, numbers etc., 4. Output Unit: Computer after computation returns the computed results, error, messages, etc. via output unit. The standard output device is a video monitor,, LCD/TFT monitor. Other output devices are printers, plotters etc., 5. Control Unit: Control unit co-ordinates activities of all units by issuing control, signals. Control signals issued by control unit govern the data transfers and then, appropriate operations take place. Control unit interprets or decides the, operation/action to be performed., The operations of a computer can be summarized as follows:, 1. A set of instructions called a program reside in the main memory of computer., 2. The CPU fetches those instructions sequentially one-by-one from the main memory,, decodes them and performs the specified operation on associated data operands in, ALU., 3. Processed data and results will be displayed on an output unit., 4. All activities pertaining to processing and data movement inside the computer, machine are governed by control unit., , Computer Organization, , Page 5

Page 11 :

Computer Organization, , [IT II – I ], , Basic Operational Concepts, An Instruction consists of two parts, an Operation code and operand/s as shown below:, OPCODE, Let us see a typical instruction, , OPERAND/s, , ADD LOCA, R0, , This instruction is an addition operation. The following are the steps to execute the, instruction: Step 1: Fetch the instruction from main memory into the processor, Step 2: Fetch the operand at location LOCA from main memory into the processor, Step 3: Add the memory operand (i.e. fetched contents of LOCA) to the contents of register, R0 Step 4: Store the result (sum) in R0., The same instruction can be realized using two instructions as, Load LOCA,, R1 Add R1,, R0, The steps to execute the instructions can be enumerated as below:, Step 1: Fetch the instruction from main memory into the, processor Step 2: Fetch the operand at location LOCA from main, memory into, the processor Register R1, Step 3: Add the content of Register R1 and the contents of register, R0 Step 4: Store the result (sum) in R0., , Computer Organization, , Page 6

Page 12 :

Computer Organization, , [IT II – I ], , Figure 3 below shows how the memory and the processor are connected. As shown in the, diagram, in addition to the ALU and the control circuitry, the processor contains a number of, registers used for several different purposes. The instruction register holds the instruction that, is currently being executed. The program counter keeps track of the execution of the program., It contains the memory address of the next instruction to be fetched and executed. There are n, general purpose registers R0 to Rn-1 which can be used by the programmers during writing, programs., , Figure 3: Connections between the processor and the memory, , The interaction between the processor and the memory and the direction of flow of, information is as shown in the diagram below:, , Figure 4: Interaction between the memory and the ALU, , Computer Organization, , Page 7

Page 13 :

Computer Organization, , [IT II – I ], , BUS STRUCTURES, Group of lines that serve as connecting path for several devices is called a bus (one bit per, line). Individual parts must communicate over a communication line or path for exchanging, data, address and control information as shown in the diagram below. Printer example –, processor to printer. A common approach is to use the concept of buffer registers to hold the, content during the transfer., , Figure 5: Single bus structure, , SOFTWARE, If a user wants to enter and run an application program, he/she needs a System Software., System Software is a collection of programs that are executed as needed to perform functions, such as:, Receiving and interpreting user commands, Entering and editing application programs and storing then as files in secondary, storage devices, Running standard application programs such as word processors, spread sheets,, games etc…, Operating system - is key system software component which helps the user to exploit the, below underlying hardware with the programs., , , , USER PROGRAM and OS ROUTINE INTERACTION, Let‟s assume computer with 1 processor, 1 disk and 1 printer and application program is in, machine code on disk. The various tasks are performed in a coordinated fashion, which is, called multitasking. t0, t1 …t5 are the instances of time and the interaction during various, instances as given below:, t0: the OS loads the program from the disk to, memory t1: program executes, t2: program accesses disk, t3: program executes some, more t4: program accesses, printer, t5: program terminates, Computer Organization, , Page 8

Page 14 :

Computer Organization, , [IT II – I ], , Figure 6 :User program and OS routine sharing of the, processor, , PERFORMANCE, , The most important measure of the performance of a computer is how quickly it can, execute programs. The speed with which a computer executes program is affected by, the design of its hardware. For best performance, it is necessary to design the compiles,, the machine instruction set, and the hardware in a coordinated way., The total time required to execute the program is elapsed time is a measure of the, performance of the entire computer system. It is affected by the speed of the processor,, the disk and the printer. The time needed to execute a instruction is called the processor, time., Just as the elapsed time for the execution of a program depends on all units in a, computer system, the processor time depends on the hardware involved in the execution, of individual machine instructions. This hardware comprises the processor and the, memory which are usually connected by the bus., The pertinent parts of the fig. c is repeated in fig. d which includes the cache memory as, part of the processor unit., Let us examine the flow of program instructions and data between the memory and the, processor. At the start of execution, all program instructions and the required data are, stored in the main memory. As the execution proceeds, instructions are fetched one by, one over the bus into the processor, and a copy is placed in the cache later if the same, instruction or data item is needed a second time, it is read directly from the cache., The processor and relatively small cache memory can be fabricated on a single IC chip., The internal speed of performing the basic steps of instruction processing on chip is, very high and is considerably faster than the speed at which the instruction and data can, be fetched from the main memory. A program will be executed faster if the movement, of instructions and data between the main memory and the processor is minimized,, which is achieved by using the cache., Computer Organization, , Page 9

Page 15 :

Computer Organization, , [IT II – I ], , For example:- Suppose a number of instructions are executed repeatedly over a short, period of time as happens in a program loop. If these instructions are available in the, cache, they can be fetched quickly during the period of repeated use. The same applies, to the data that are used repeatedly., , Processor clock:, Processor circuits are controlled by a timing signal called clock. The clock designer the, regular time intervals called clock cycles. To execute a machine instruction the, processor divides the action to be performed into a sequence of basic steps that each step, can be completed in one clock cycle. The length P of one clock cycle is an important, parameter that affects the processor performance., Processor used in today‟s personal computer and work station have a clock rates that, range from a few hundred million to over a billion cycles per second., , Basic performance equation:, We now focus our attention on the processor time component of the total elapsed time., Let „T‟ be the processor time required to execute a program that has been prepared, in some high-level language. The compiler generates a machine language object, program that corresponds to the source program. Assume that complete execution of the, program requires the execution of N machine cycle language instructions. The number, N is the actual number of instruction execution and is not necessarily equal to the, number of machine cycle instructions in the object program. Some instruction may be, executed more than once, which in the case for instructions inside a program loop others, may not be executed all, depending on the input data used., Suppose that the average number of basic steps needed to execute one machine, cycle instruction is S, where each basic step is completed in one clock cycle. If clock, rate is „R‟ cycles per second, the program execution time is given by, T=N*S/R, this is often referred to as the basic performance equation., We must emphasize that N, S & R are not independent parameters changing one may, affect another. Introducing a new feature in the design of a processor will lead to, improved performance only if the overall result is to reduce the value of T., , Performance measurements:, It is very important to be able to access the performance of a computer, comp designers, use performance estimates to evaluate the effectiveness of new features., The previous argument suggests that the performance of a computer is given by the, execution time T, for the program of interest., Inspite of the performance equation being so simple, the evaluation of „T‟ is highly, complex. Moreover the parameters like the clock speed and various architectural, features are not reliable indicators of the expected performance., Hence measurement of computer performance using bench mark programs is done to, make comparisons possible, standardized programs must be used., Computer Organization, , Page 10

Page 16 :

Computer Organization, , [IT II – I ], , The performance measure is the time taken by the computer to execute a given bench, mark. Initially some attempts were made to create artificial programs that could be used, as bench mark programs. But synthetic programs do not properly predict the, performance obtained when real application programs are run., A non profit organization called SPEC- system performance evaluation corporation, selects and publishes bench marks., The program selected range from game playing, compiler, and data base applications to, numerically intensive programs in astrophysics and quantum chemistry. In each case,, the program is compiled under test, and the running time on a real computer is, measured. The same program is also compiled and run on one computer selected as, reference., The „SPEC‟ rating is computed as follows., Running time on the reference computer, SPEC rating = --------------------------------------------------Running time on the computer under test, If the SPEC rating = 50, , Multiprocessor & microprocessors:, Large computers that contain a number of processor units are called multiprocessor, system. These systems either execute a number of different application tasks in parallel, or execute subtasks of a single large task in parallel. All processors usually have access, to all memory locations in such system & hence they are called shared memory, multiprocessor systems. The high performance of these systems comes with much, increased complexity and cost. In contrast to multiprocessor systems, it is also possible, to use an interconnected group of complete computers to achieve high total, computational power. These computers normally have access to their own memory units, when the tasks they are executing need to communicate data they do so by exchanging, messages over a communication network. This properly distinguishes them from shared, memory multiprocessors, leading to name message-passing multi computer., , Data Representation:, Information that a Computer is dealing with, , Data, Numeric Data, Numbers( Integer, real), , Non-numeric Data, Letters, Symbols, , Relationship between data elements, Data Structures, Linear Lists, Trees, Rings, etc, , Program(Instruction), , Numeric Data Representation, , Decimal, Computer Organization, , Binary Octal, , Hexadecimal, Page 11

Page 17 :

Computer Organization, , Fixed, Point, , 00, 01, 02, 03, 04, 05, 06, 07, 08, 09, 10, 11, 12, 13, 14, 15, , 0000, 0001, 0010, 0011, 0100, 0101, 0110, 0111, 1000, 1001, 1010, 1011, 1100, 1101, 1110, 1111, , [IT II – I ], , 00, 01, 02, 03, 04, 05, 06, 07, 10, 11, 12, 13, 14, 15, 16, 17, , 0, 1, 2, 3, 4, 5, 6, 7, 8, 9, A, B, C, D, E, F, , Representation:, It‟s the representation for integers only where the decimal point is always fixed. i.e at, the end of rightmost point. it can be again represented in two ways., 1. Sign and Magnitude Representation, In this system, he most significant (leftmost) bit in the word as a sign bit. If the sign bit, is 0, the number is positive; if the sign bit is 1, the number is negative., The simplest form of representing sign bit is the sign magnitude representation., One of the draw back for sign magnitude number is addition and subtraction need to, consider both sign of the numbers and their relative magnitude., Another drawback is there are two representation for 0(Zero) i.e +0 and -0., 2. One’s Complement (1’s) Representation, In this representation negative values are obtained by complementing each bit of the, corresponding positive number., For example 1s complement of 0101 is 1010 . The process of forming the 1s, complement of a given number is equivalent to subtracting that number from 2n -1 i.e, from 1111 for 4 bit number., Two‟s Complement (2‟s) Representation Forming the 2s complement of a number is, done by subtracting that number from 2n . So 2s complement of a number is obtained, by adding 1 to 1s complement of that number., Ex: 2‟s complement of 0101 is 1010 +1 = 1011, NB: In all systems, the leftmost bit is 0 for positive number and 1 for negative number., , Floating-point representation, , Floating-point numbers are so called as the decimal or binary point floats over the base, , Computer Organization, , Page 12

Page 18 :

Computer Organization, , [IT II – I ], , depending on the exponent value., It consists two components., • Exponent, • Mantissa, Example: Avogadro's number can be written as 6.02x1023 in base 10. And the mantissa, and exponent are 6.02 and 1023 respctivly. But computer floating-point numbers are, usually based on base two. So 6.02x1023 is approximately (1 and 63/64)x278 or, 1.111111 (base two) x 21001110 (base two), , Error Detection Codes, Parity System, Hamming Distance, CRC, Check sum, , Register Transfer Language And Micro Operations:, Register Transfer language:, Digital systems are composed of modules that are constructed from digital, components, such as registers, decoders, arithmetic elements, and control logic, The modules are interconnected with common data and control paths to form a, digital computer system, The operations executed on data stored in registers are called microoperations, A microoperation is an elementary operation performed on the information, stored in one or more registers, Examples are shift, count, clear, and load, Some of the digital components from before are registers that implement, microoperations, The internal hardware organization of a digital computer is best defined by, specifying, o The set of registers it contains and their functions, o The sequence of microoperations performed on the binary information, stored, o The control that initiates the sequence of microoperations, , , , , , Use symbols, rather than words, to specify the sequence of microoperations, The symbolic notation used is called a register transfer language, A programming language is a procedure for writing symbols to specify a given, computational process, Define symbols for various types of microoperations and describe associated, hardware that can implement the microoperations, , Register Transfer, , , , , Designate computer registers by capital letters to denote its function, The register that holds an address for the memory unit is called MAR, The program counter register is called PC, , Computer Organization, , Page 13

Page 19 :

Computer Organization, , , , , , , , , , , [IT II – I ], , IR is the instruction register and R1 is a processor register, The individual flip-flops in an n-bit register are numbered in sequence from 0 to, n-1, Refer to Figure 4.1 for the different representations of a register, , Designate information transfer from one register to, another by R2 R1, This statement implies that the hardware is available, o The outputs of the source must have a path to the inputs of the, destination, o The destination register has a parallel load capability, If the transfer is to occur only under a predetermined control condition,, designate it by, If (P = 1) then (R2 R1), or,, P: R2 R1,, , Computer Organization, , Page 14

Page 20 :

Computer Organization, where P is a control function that can be either 0 or 1, , , Every statement written in register transfer notation implies the, presence of the required hardware construction, , , , , It is assumed that all transfers occur during a clock edge transition, All microoperations written on a single line are to be executed at the, same time T: R2 R1, R1 R2, , Computer Organization, , Page 15

Page 21 :

Computer Organization, Bus and Memory Transfers, , , , , , , , , , , , , , , , , Rather than connecting wires between all registers, a common bus is, used, A bus structure consists of a set of common lines, one for each bit of a, register, Control signals determine which register is selected by the bus, during each transfer, Multiplexers can be used to construct a common bus, Multiplexers select the source register whose binary information is, then placed on the bus, The select lines are connected to the selection inputs of the, multiplexers and choose the bits of one register, , In general, a bys system will multiplex k registers of n bits each to, produce an n- line common bus, This requires n multiplexers – one for each bit, The size of each multiplexer must be k x 1, The number of select lines required is log k, To transfer information from the bus to a register, the bus lines are, connected to the inputs of all destination registers and the, corresponding load control line must be activated, Rather than listing each step as, BUS C, R1 BUS,, use, R1 C, since the bus isimplied, Instead of using multiplexers, three-state gates can be used to, , Computer Organization, , Page 16

Page 22 :

Computer Organization, , , , , , , , , , , , , , , , , construct the bus system, A three-state gate is a digital circuit that exhibits three states, Two of the states are signals equivalent to logic 1 and 0, The third state is a high-impedance state – this behaves like an open, circuit, which means the output is disconnected and does not have a, logic significance, , The three-state buffer gate has a normal input and a control, input which determines the output state, With control 1, the output equals the normal input, With control 0, the gate goes to a high-impedance state, This enables a large number of three-state gate outputs to be connected, with wires to form a common bus line without endangering loading, effects, , Decoders are used to ensure that no more than one control input is, active at any given time, This circuit can replace the multiplexer in Figure 4.3, To construct a common bus for four registers of n bits each using, three-state buffers, we need n circuits with four buffers in each, Only one decoder is necessary to select between the four registers, Designate a memory word by the letter M, It is necessary to specify the address of M when writing, memory transfer operations, , Computer Organization, , Page 17

Page 23 :

Computer Organization, , , , , Designate the address register by AR and the data register by DR, The read operation can be stated as: Read: DR M[AR], The write operation can, be stated as:, Write: M[AR] , R1, , Arithmetic Microoperations, , , There are four categories of the most common microoperations:, o Register transfer: transfer binary information from one register, to another, o Arithmetic: perform arithmetic operations on numeric, data stored in registers, o Logic: perform bit manipulation operations on non-numeric, data stored in registers, o Shift: perform shift operations on data stored in registers, , , , The basic arithmetic microoperations are addition, subtraction,, increment, decrement, and shift, Example of addition: R3 R1 +R2, Subtraction is most often implemented through complementation and, addition, Example of subtraction: R3 R1 +R2 + 1 (strikethrough denotes, bar on top – 1‟s complement of R2), Adding 1 to the 1‟s complement produces the 2‟s complement, Adding the contents of R1 to the 2‟s complement of R2 is, equivalent to subtracting, , , , , , , , Computer Organization, , Page 18

Page 24 :

Computer Organization, , , , , , Multiply and divide are not included as microoperations, A microoperation is one that can be executed by one clock pulse, Multiply (divide) is implemented by a sequence of add and shift, microoperations (subtract and shift), , , , To implement the add microoperation with hardware, we need the, registers that hold the data and the digital component that performs, the addition, A full-adder adds two bits and a previous carry, , , , , , , , , A binary adder is a digital circuit that generates the arithmetic sum of, two binary numbers of any length, A binary added is constructed with full-adder circuits connected in, cascade, An n-bit binary adder requires n full-adders, , The subtraction A-B can be carried out by the following steps, o Take the 1‟s complement of B (invert each bit), , Computer Organization, , Page 19

Page 25 :

Computer Organization, , , , , , , , o Get the 2‟s complement by adding 1, o Add the result to A, The addition and subtraction operations can be combined into one, common circuit by including an XOR gate with each full-adder, , The increment microoperation adds one to a number in a register, This can be implemented by using a binary counter – every time the, count enable is active, the count is incremented by one, If the increment is to be performed independent of a particular, register, then use half-adders connected in cascade, , , , An n-bit binary incrementer requires n half-adders, , , , Each of the arithmetic microoperations can be implemented in one, composite arithmetic circuit, The basic component is the parallel adder, Multiplexers are used to choose between the different operations, The output of the binary adder is calculated from the, following sum: D = A + Y + Cin, , , , , , Computer Organization, , Page 20

Page 26 :

Computer Organization, , Logic Microoperations, , , , , Logic operations specify binary operations for strings of bits stored, in registers and treat each bit separately, Example: the XOR of R1 and R2 is symbolized by, P: R1 R1⊕ R2, Example: R1 = 1010 and R2 = 1100, 1010 Content of R1, 1100 Content of R2, , Computer Organization, , Page 21

Page 27 :

Computer Organization, 0110, , , Content of R1 after P = 1, , Symbols used for logical microoperations:, o OR: , o AND: , o XOR: ⊕, , , , , , , , , , The + sign has two different meanings: logical OR and summation, When + is in a microoperation, then summation, When + is in a control function, then OR, Example:, P + Q: R1 R2 + R3, R4 R5 R6, There are 16 different logic operations that can be performed with, two binary variables, , The hardware implementation of logic microoperations requires that, logic gates be inserted for each bit or pair of bits in the registers, , Computer Organization, , Page 22

Page 28 :

Computer Organization, , , All 16 microoperations can be derived from using four logic gates, , , , Logic microoperations can be used to change bit values, delete a, group of bits, or insert new bit values into a register, The selective-set operation sets to 1 the bits in A where there are, corresponding 1‟s in B, 1010 A before, 1100 B, (logic, operand), 1110 A, after, , , , AAB, , , The selective-complement operation complements bits in A, where there are corresponding 1‟s in B, 1010 A before, 1100 B, (logic, operand), 0110 A, after, A A⊕ B, , , , The selective-clear operation clears to 0 the bits in A only, where there are corresponding 1‟s in B, 1010 A before, 1100 B, (logic, , Computer Organization, , Page 23

Page 29 :

Computer Organization, operand), 0010 A, after, A A B, , , , , , The mask operation is similar to the selective-clear operation, except, that the bits of A are cleared only where there are corresponding 0‟s, in B, 1010 A before, 1100 B, (logic, operand), 1000 A, after, AAB, The insert operation inserts a new value into a group of bits, This is done by first masking the bits to be replaced and then Oring, them with the bits to be inserted, 0110 1010, A before, 0000 1111, B (mask), 0000 1010, A after masking, 0000 1010, 1001 0000, 1001 1010, , , , A before, B (insert), A after insertion, , The clear operation compares the bits in A and B and produces an all, 0‟s result if the two number are equal, 1010 A, 1010 B, 0000, , A A⊕ B, , Shift Microoperations, , , , , , , , , Shift microoperations are used for serial transfer of data, They are also used in conjunction with arithmetic, logic, and, other data- processing operations, There are three types of shifts: logical, circular, and arithmetic, A logical shift is one that transfers 0 through the serial input, The symbols shl and shr are for logical shift-left and shift-right by, one position R1 shlR1, The circular shift (aka rotate) circulates the bits of the register, around the two ends without loss of information, The symbols cil and cir are for circular shift left and right, , Computer Organization, , Page 24

Page 30 :

Computer Organization, , , , , , , , , The arithmetic shift shifts a signed binary number to the left or right, To the left is multiplying by 2, to the right is dividing by 2, Arithmetic shifts must leave the sign bit unchanged, A sign reversal occurs if the bit in Rn-1 changes in value after the shift, This happens if the multiplication causes an overflow, An overflow flip-flop Vs can be used to detect, the overflow Vs = Rn-1 ⊕ Rn-2, , , , , , , , A bi-directional shift unit with parallel load could be used to implement, this, Two clock pulses are necessary with this configuration: one to load, the value and another to shift, In a processor unit with many registers it is more efficient to, implement the shift operation with a combinational circuit, The content of a register to be shifted is first placed onto a common, bus and the output is connected to the combinational shifter, the shifted, number is then loaded back into the register, This can be constructed with multiplexers, , Computer Organization, , Page 25

Page 31 :

Computer Organization, , Arithmetic Logic Shift Unit, , , , , , The arithmetic logic unit (ALU) is a common operational unit, connected to a number of storage registers, To perform a microoperation, the contents of specified registers are, placed in the inputs of the ALU, The ALU performs an operation and the result is then transferred to a, destination register, The ALU is a combinational circuit so that the entire register transfer, operation from the source registers through the ALU and into the, destination register can be performed during one clock pulse period, , Computer Organization, , Page 26

Page 32 :

Computer Organization, , Computer Organization, , Page 27

Page 33 :

Computer Organization, , Computer Organization, , Page 28

Page 34 :

Computer Organization, UNIT II, Basic Computer Organization and Design, , Instruction codes. Computer Registers Computer instructions, Timing and, Control, Instruction cycle. Memory Reference Instructions, Input – Output and, Interrupt, Complete Computer Description., Micro Programmed Control: Control memory, Address sequencing, micro, program example, design of control unit, micro Programmed control, , ----------------------------------------------------------------------------------------------------------Instruction Formats:, A computer will usually have a variety of instruction code formats. It, is the function of the control unit within the CPU to interpret each, instruction code and provide the necessary control functions needed to, process the instruction., The format of an instruction is usually depicted in a rectangular box, symbolizing the bits of the instruction as they appear in memory words or in, a control register. The bits of the instruction are divided into groups called, fields. The most common fields found in instruction formats are:, 1 An operation code field that specifies the operation to be, performed., 2. An address field that designates a memory address or a processor, registers., 3. A mode field that specifies the way the operand or the effective, address is determined., , Other special fields are sometimes employed under certain, circumstances, as for example a field that gives the number of shifts in a, shift-type instruction., The operation code field of an instruction is a group of bits that define, various processor operations, such as add, subtract, complement, and shift., The bits that define the mode field of an instruction code specify a variety, of alternatives for choosing the operands from the given address., Operations specified by computer instructions are executed on some, data stored in memory or processor registers, Operands residing in, processor registers are specified with a register address. A register address, is a binary number of k bits that defines one of 2k registers in the CPU. Thus, a CPU with 16 processor registers R0 through R15 will have a register, address field of four bits. The binary number 0101, for example, will, Computer Organization, , Page 29

Page 35 :

Computer Organization, designate register R5., Computers may have instructions of several different lengths, containing varying number of addresses. The number of address fields in, the instruction format of a computer depends on the internal organization of, its registers. Most computers fall into one of three types of CPU, organizations:, 1, , Single accumulator organization., , 2, , General register organization., , 3, , Stack organization., , All operations are performed with an implied accumulator register., The instruction format in this type of computer uses one address field. For, example, the instruction that specifies an arithmetic addition is defined by, an assembly language instruction as, , ADD., , Where X is the address of the operand. The ADD instruction in this, case results in the operation AC ← AC + M[X]. AC is the accumulator, register and M[X] symbolizes the memory word located at address X., An example of a general register type of organization was presented, in Fig. 7.1. The instruction format in this type of computer needs three, register address fields. Thus the instruction for an arithmetic addition may, be written in an assembly language as, ADD, , R1, R2, R3, , To denote the operation R1 ← R2 + R3. The number of address fields, in the instruction can be reduced from three to two if the destination register, is the same as one of the source registers. Thus the instruction, ADD, , R1, R2, , Would denote the operation R1 ← R1 + R2. Only register addresses, for R1 and R2 need be specified in this instruction., Computers with multiple processor registers use the move instruction, with a mnemonic MOV to symbolize a transfer instruction. Thus the, instruction, MOV, , R1, R2, , Denotes the transfer R1 ← R2 (or R2 ← R1, depending on the, particular computer). Thus transfer-type instructions need two address fields, Computer Organization, , Page 30

Page 36 :

Computer Organization, to specify the source and the destination., General register-type computers employ two or three address fields in, their instruction format. Each address field may specify a processor register, or a memory word. An instruction symbolized by, ADD, , R1, X, , Would specify the operation R1 ← R + M [X]. It has two address, fields, one for register R1 and the other for the memory address X., The stack-organized CPU was presented in Fig. 8-4. Computers with, stack organization would have PUSH and POP instructions which require, an address field. Thus the instruction, PUSH, , X, , Will push the word at address X to the top of the stack. The stack, pointer is updated automatically. Operation-type instructions do not need an, address field in stack-organized computers. This is because the operation is, performed on the two items that are on top of the stack. The instruction, ADD in a stack computer consists of an operation code only with no address, field. This operation has the effect of popping the two top numbers from the, stack, adding the numbers, and pushing the sum into the stack. There is no, need to specify operands with an address field since all operands are, implied to be in the stack., To illustrate the influence of the number of addresses on computer, programs, we will evaluate the arithmetic statement X = (A + B) ∗ (C + D)., Using zero, one, two, or three address instruction. We will use the, symbols ADD, SUB, MUL, and DIV for the four arithmetic operations;, MOV for the transfer-type operation; and LOAD and STORE for transfers, to and from memory and AC register. We will assume that the operands are, in memory addresses A, B, C, and D, and the result must be stored in, memory at address X., Three-Address Instructions, Computers with three-address instruction formats can use each, address field to specify either a processor register or a memory operand., The program in assembly language that evaluates X = (A + B) ∗ (C + D) is, shown below, together with comments that explain the register transfer, Computer Organization, , Page 31

Page 37 :

Computer Organization, operation of each instruction., ADD, , R1, A, B, , R1, , ←, , R2, , ←, , M [A] + M [B], ADD, , R2, C, D, , M [C] + M [D], MUL X, R1, R2, , M [X], , ← R1 ∗ R2, It is assumed that the computer has two processor registers, R1 and R2. The, symbol M [A] denotes the operand at memory address symbolized by A., The advantage of the three-address format is that it results in short programs, when evaluating arithmetic expressions. The disadvantage is that the binarycoded instructions require too many bits to specify three addresses. An, example of a commercial computer that uses three-address instructions is, the Cyber 170. The instruction formats in the Cyber computer are restricted, to either three register address fields or two register address fields and one, memory address field., , Two-Address Instructions, Two address instructions are the most common in commercial computers., Here again each address field can specify either a processor register or a, memory word. The program to evaluate X = (A + B) ∗ (C + D) is as, follows:, MOV R1, A, , R1 ← M [A], , ADD, , R1 ← R1 + M [B], , R1, B, , MOV R2, C, , R2 ← M [C], , ADD, , R2, D, , R2 ← R2 + M [D], , MUL, , R1, R2, , R1 ← R1∗R2, , MOV X, R1, , M [X] ← R1, , The MOV instruction moves or transfers the operands to and from, memory and processor registers. The first symbol listed in an instruction is, assumed to be both a source and the destination where the result of the, operation is transferred., Computer Organization, , Page 32

Page 38 :

Computer Organization, One-Address Instructions, One-address instructions use an implied accumulator (AC) register for, all data manipulation. For multiplication and division there is a need for a, second register. However, here we will neglect the second and assume that, the AC contains the result of tall operations. The program to evaluate X =, (A + B) ∗ (C + D) is, LOAD, , A, , AC ← M [A], , ADD, , B, , AC ← A [C] + M [B], , LOAD, , C, , AC ← M [C], , ADD, , D, , AC ← AC + M [D], , MUL, , T, , AC ← AC ∗ M [T], , STORE, , X, , M [X] ← AC, , STORE, , T, , M [T] ← AC, , All operations are done between the AC register and a memory, operand. T is the address of a temporary memory location required for, storing the intermediate result., Zero-Address Instructions, A stack-organized computer does not use an address field for the, instructions ADD and MUL. The PUSH and POP instructions, however,, need an address field to specify the operand that communicates with the, stack. The following program shows how X = (A + B) ∗ (C + D) will be, written for a stack organized computer. (TOS stands for top of stack), PUSH, , A, , TOS ← A, , PUSH, , B, , TOS ← B, , ADD, , TOS ← (A + B), , PUSH, , C, , TOS ← C, , PUSH, , D, , TOS ← D, , ADD, , TOS ← (C + D), , MUL, , TOS ← (C + D) ∗ (A + B), , POP, , X, , M [X] ← TOS, , To evaluate arithmetic expressions in a stack computer, it is necessary, to convert the expression into reverse Polish notation. The name “zeroaddress” is given to this type of computer because of the absence of an, Computer Organization, , Page 33

Page 39 :

Computer Organization, address field in the computational instructions., , Instruction Codes, A set of instructions that specify the operations, operands, and the sequence by, which processing has to occur. An instruction code is a group of bits that tells the, computer to perform a specific operation part., , Format of Instruction, The format of an instruction is depicted in a rectangular box symbolizing the bits, of an instruction. Basic fields of an instruction format are given below:, 1., , An operation code field that specifies the operation to be performed., , 2., , An address field that designates the memory address or register., , 3., , A mode field that specifies the way the operand of effective address is, determined., , Computers may have instructions of different lengths containing varying number, of addresses. The number of address field in the instruction format depends upon, the internal organization of its registers., , Addressing Modes, , To understand the various addressing modes to be presented in this section,, , it is imperative that we understand the basic operation cycle of the computer., The control unit of a computer is designed to go through an instruction cycle, that is divided into three major phases:, 1., , Fetch the instruction from memory, , 2., , Decode the instruction., , 3., , Execute the instruction., , There is one register in the computer called the program counter of PC that, keeps track of the instructions in the program stored in memory. PC holds the, address of the instruction to be executed next and is incremented each time an, instruction is fetched from memory. The decoding done in step 2 determines, the operation to be performed, the addressing mode of the instruction and the, location of the operands. The computer then executes the instruction and, returns to step 1 to fetch the next instruction in sequence., In some computers the addressing mode of the instruction is specified, with a distinct binary code, just like the operation code is specified. Other, computers use a single binary code that designates both the operation and, the mode of the instruction. Instructions may be defined with a variety of, Computer Organization, , Page 34

Page 40 :

Computer Organization, addressing modes, and sometimes, two or more addressing modes are, combined in one instruction., 1. The operation code specified the operation to be performed. The, mode field is sued to locate the operands needed for the operation. There, may or may not be an address field in the instruction. If there is an address, field, it may designate a memory address or a processor register. Moreover,, as discussed in the preceding section, the instruction may have more than, one address field, and each address field may be associated with its own, particular addressing mode., Although most addressing modes modify the address field of the, instruction, there are two modes that need no address field at all. These are, the implied and immediate modes., 1 Implied Mode: In this mode the operands are specified implicitly in the, definition of the instruction. For example, the instruction “complement accumulator” is, an implied-mode instruction because the operand in the accumulator register is implied, in the definition of the instruction. In fact, all register reference instructions that sue an, accumulator are implied-mode instructions., Op code, , Mode, , Address, , Figure 1: Instruction format with mode field, , Zero-address instructions in a stack-organized computer are impliedmode instructions since the operands are implied to be on top of the stack., 2 Immediate Mode: In this mode the operand is specified in the instruction, itself. Inother words, an immediate- mode instruction has an operand field rather than, an address field. The operand field contains the actual operand to be used in, conjunction with the operation specified in the instruction. Immediate-mode, instructions are useful for initializing registers to a constant value., , It was mentioned previously that the address field of an instruction, may specify either a memory word or a processor register. When the, address field specifies a processor register, the instruction is said to be in the, register mode., 3 Register Mode: In this mode the operands are in registers that reside within, the CPU.The particular register is selected from a register field in the instruction. A kbit field can specify any one of 2k registers., 4 Register Indirect Mode: In this mode the instruction specifies a register in the, CPUwhose contents give the address of the operand in memory. In other words, the, , Computer Organization, , Page 35

Page 41 :

Computer Organization, selected register contains the address of the operand rather than the operand itself., , Before using a register indirect mode instruction, the programmer must, ensure that the memory address fo the operand is placed in the processor, register with a previous instruction. A reference to the register is then, equivalent to specifying a memory address. The advantage of a register, indirect mode instruction is that the address field of the instruction sues, fewer bits to select a register than would have been required to specify a, memory address directly., 5 Auto increment or Auto decrement Mode: This is similar to the register indirect, modeexcept that the register is incremented or decremented after (or before) its value is, used to access memory. When the address stored in the register refers to a table of data, in memory, it is necessary to increment or decrement the register after every access to, the table. This can be achieved by using the increment or decrement instruction., However, because it is such a common requirement, some computers incorporate a, special mode that automatically increments or decrements the content of the register, after data access., , The address field of an instruction is used by the control unit in the, CPU to obtain the operand from memory. Sometimes the value given in the, address field is the address of the operand, but sometimes it is just an, address from which the address of the operand is calculated. To differentiate, among the various addressing modes it is necessary to distinguish between, the address part of the instruction and the effective address used by the, control when executing the instruction. The effective address is defined to, be the memory address obtained from the computation dictated by the given, addressing mode. The effective address is the address of the operand in a, computational-type instruction. It is the address where control branches in, response to a branch-type instruction. We have already defined two, addressing modes in previous chapter., 6 Direct Address Mode: In this mode the effective address is equal to the address part, ofthe instruction. The operand resides in memory and its address is given directly by, the address field of the instruction. In a branch-type instruction the address field, specifies the actual branch address., 7 Indirect Address Mode: In this mode the address field of the instruction gives, theaddress where the effective address is stored in memory. Control fetches the, instruction from memory and uses its address part to access memory again to read the, , Computer Organization, , Page 36

Page 42 :

Computer Organization, effective address., 8 Relative Address Mode: In this mode the content of the program counter is added to, theaddress part of the instruction in order to obtain the effective address. The address, part of the instruction is usually a signed number (in 2‟s complement representation), which can be either positive or negative. When this number is added to the content of, the program counter, the result produces an effective address whose position in, memory is relative to the address of the next instruction. To clarify with an example,, assume that the program counter contains the number 825 and the address part of the, instruction contains the number 24. The instruction at location 825 is read from, memory during the fetch phase and the program counter is then incremented by one, to 826 + 24 = 850. This is 24 memory locations forward from the address of the next, instruction. Relative addressing is often used with branch-type instructions when the, branch address is in the area surrounding the instruction word itself. It results in a, shorter address field in the instruction format since the relative address can be, specified with a smaller number of bits compared to the number of bits required to, designate the entire memory address., 9 Indexed Addressing Mode: In this mode the content of an index register is added, to theaddress part of the instruction to obtain the effective address. The index, register is a special CPU register that contains an index value. The address field of, the instruction defines the beginning address of a data array in memory. Each, operand in the array is stored in memory relative to the beginning address. The, distance between the beginning address and the address of the operand is the index, value stores in the index register. Any operand in the array can be accessed with, the same instruction provided that the index register contains the correct index, value. The index register can be incremented to facilitate access to consecutive, operands. Note that if an index-type instruction does not include an address field in, its format, the instructionconverts to the register indirect mode of operation. Some, computers dedicate one CPU register to function solely as an index register. This, register is involved implicitly when the index-mode instruction is used. In, computers with many processor registers, any one of the CPU registers can contain, the index number. In such a case the register must be specified explicitly in a, register field within the instruction format., 10, , Base Register Addressing Mode: In this mode the content of a base register is, , added tothe address part of the instruction to obtain the effective address. This is, similar to the indexed addressing mode except that the register is now called a base, register instead of an index register. The difference between the two modes is in, the way they are used rather than in the way that they are computed. An index, , Computer Organization, , Page 37

Page 43 :

Computer Organization, register is assumed to hold an index number that is relative to the address part of, the instruction. A base register is assumed to hold a base address and the address, field of the instruction gives a displacement relative to this base address. The base, register addressing mode is used in computers to facilitate the relocation of, programs in memory. When programs and data are moved from one segment of, memory to another, as required in multiprogramming systems, the address values, of the base register requires updating to reflect the beginning of a new memory, segment., , Numerical Example, , Computer Organization, , Page 38

Page 44 :

Computer Organization, Computer Registers, Data Register(DR) : hold the operand(Data) read from memory, Accumulator Register(AC) : general purpose processing register, Instruction Register(IR) : hold the instruction read from memory, Temporary Register(TR) : hold a temporary data during processing, Address Register(AR) : hold a memory address, 12 bit width, Program Counter(PC) :, , »hold the address of the next instruction to be read from memory, after the current instruction is executed, »Instruction words are read and executed in sequence unless a branch, instruction is encountered, »A branch instruction calls for a transfer to a nonconsecutive, instruction in the program, »The address part of a branch instruction is transferred to PC to become, the address of the next instruction, Input Register(INPR) : receive an 8-bit character from an input device, Output Register(OUTR) : hold an 8-bit character for an, output device, The following registers are used in Mano‟s example computer., Register, , Number, , Register, , Register, , symbol, , of bits, , name, , Function-----------------------, , DR, , 16, , Data register, , Holds memory operands, , AR, , 12, , Address register, , Holds address for memory, , AC, , 16, , Accumulator, , Processor register, , IR, , 16, , Instruction register, , Holds instruction code, , PC, , 12, , Program counter, , Holds address of instruction, , TR, , 16, , Temporary register, , Holds temporary data, , INPR, , 8, , Input register, , Holds input character, , OUTR, , 8, , Output register, , Holds output character, , Computer Organization, , Page 39

Page 45 :

Computer Organization, Computer Instructions:, The basic computer has 16 bit instruction register (IR) which can denote either, memory reference or register reference or input-output instruction., 1. Memory Reference – These instructions refer to memory address as an, operand. The other operand is always accumulator. Specifies 12 bit, address, 3 bit opcode (other than 111) and 1 bit addressing mode for direct, and indirect addressing., Example, –, IR register contains = 0001XXXXXXXXXXXX, i.e. ADD after fetching, and decoding of instruction we find out that it is a memory reference, instruction for ADD operation., Hence, DR <- M[AR], AC <- AC+ DR, SC <- 0, 2., , Register Reference – These instructions perform operations on registers, rather than memory addresses. The IR(14-12) is 111 (differentiates it from, memory reference) and IR(15) is 0 (differentiates it from input/output, instructions). The rest 12 bits specify register operation., Example, –, IR register contains = 0111001000000000, i.e. CMA after fetch and decode, cycle we find out that it is a register reference instruction for complement, accumulator., Hence, AC <- ~AC, , 3., , Input/Output – These instructions are for communication between, computer and outside environment. The IR(14-12) is 111 (differentiates it, from memory reference) and IR(15) is 1 (differentiates it from register, reference instructions). The rest 12 bits specify I/O operation., Example, –, IR register contains = 1111100000000000, i.e. INP after fetch and decode, cycle we find out that it is an input/output instruction for inputing, character. Hence, INPUT character from peripheral device., , Timing and Control, All sequential circuits in the Basic Computer CPU are driven by a master clock,, with the exception of the INPR register. At each clock pulse, the control unit, sends control signals to control inputs of the bus, the registers, and the ALU., Control unit design and implementation can be done by two general methods:, A hardwired control unit is designed from scratch using traditional digital, logic design techniques to produce a minimal, optimized circuit. In other, words, the control unit is like an ASIC (application-specific integrated, circuit)., A microprogrammed control unit is built from some sort of ROM. The, desired control signals are simply stored in the ROM, and retrieved in, sequence to drive the microoperations needed by a particular instruction., , Computer Organization, , Page 40

Page 46 :

Computer Organization, Instruction Cycle, The CPU performs a sequence of microoperations for each instruction. The, sequence for each instruction of the Basic Computer can be refined into 4, abstract phases:, 1., 2., 3., 4., , Fetch instruction, Decode, Fetch operand, Execute, , Program execution can be represented as a top-down design:, 1. Program execution, a. Instruction 1, i., Fetch instruction, ii. Decode, iii. Fetch operand, iv., Execute, b. Instruction 2, i., Fetch instruction, ii. Decode, iii. Fetch operand, iv., Execute, c. Instruction 3 ..., Program execution begins with:, PC ← address of first instruction, SC ← 0, After this, the SC is incremented at each clock cycle until an instruction is, completed, and then it is cleared to begin the next instruction. This process, repeats until a HLT instruction is executed, or until the power is shut off., Instruction Fetch and Decode, The instruction fetch and decode phases are the same for all instructions, so the, control functions and microoperations will be independent of the instruction, code., Everything that happens in this phase is driven entirely by timing variables T0,, T1 and T2. Hence, all control inputs in the CPU during fetch and decode are, functions of these three variables alone., T0: AR ← PC, T1: IR ← M[AR], PC ← PC + 1, T2: D0-7 ← decoded IR(12-14), AR ← IR(0-11), I ← IR(15), For every timing cycle, we assume SC ← SC + 1 unless it is stated that SC ← 0., Computer Organization, , Page 41

Page 47 :

Computer Organization, Micro Programmed Control:, Control Memory, , , , , , , , , , , , , , , , , , , , , , , , The control unit in a digital computer initiates sequences of microoperations, The complexity of the digital system is derived form the number of, sequences that are performed, When the control signals are generated by hardware, it is hardwired, In a bus-oriented system, the control signals that specify, microoperations are groups of bits that select the paths in, multiplexers, decoders, and ALUs., The control unit initiates a series of sequential steps of microoperations, The control variables can be represented by a string of 1‟s and 0‟s called a, control word, A microprogrammed control unit is a control unit whose binary control, variables are stored in memory, A sequence of microinstructions constitutes a microprogram, The control memory can be a read-only memory, Dynamic microprogramming permits a microprogram to be loaded, and uses a writable control memory, A computer with a microprogrammed control unit will have two, separate memories: a main memory and a control memory, The microprogram consists of microinstructions that specify various, internal control signals for execution of register microoperations, These microinstructions generate the microoperations to:, o, fetch the instruction from main memory, o, evaluate the effective address, o, execute the operation, o, return control to the fetch phase for the next instruction, The control memory address register specifies the address of the, microinstruction, The control data register holds the microinstruction read from memory, The microinstruction contains a control word that specifies one, or more microoperations for the data processor, The location for the next microinstruction may, or may not be the, next in sequence, Some bits of the present microinstruction control the generation of the, address of the next microinstruction, The next address may also be a function of external input conditions, While the microoperations are being executed, the next address is, computed in the next address generator circuit (sequencer) and then, transferred into the CAR to read the next microinstructions, Typical functions of a sequencer are:, , o, incrementing the CAR by one, o, loading into the CAR and address from control memory, o, transferring an external address, o, loading an initial address to start the control operations, A clock is applied to the CAR and the control word and next-address, Computer Organization, , Page 42

Page 48 :

Computer Organization, , , , , , information are taken directly from the control memory, The address value is the input for the ROM and the control work is the, output, No read signal is required for the ROM as in a RAM, The main advantage of the microprogrammed control is that once the, hardware configuration is established, there should be no need for, h/w or wiring changes, To establish a different control sequence, specify a, different set of microinstructions for control memory, , Address Sequencing, , , , , , , , , , , , , Microinstructions are stored in control memory in groups, with each group, specifying a routine, Each computer instruction has its own microprogram routine to, generate the microoperations, The hardware that controls the address sequencing of the control memory, must be capable of sequencing the microinstructions within a routine and, be able to branch from one routine to another, Steps the control must undergo during the execution of a single, computer instruction:, o Load an initial address into the CAR when power is turned on in the, computer. This address is usually the address of the first, microinstruction that activates the instruction fetch routine – IR, holds instruction, o The control memory then goes through the routine to, determine the effective address of the operand – AR holds, operand address, o The next step is to generate the microoperations that, execute the instruction by considering the opcode and, applying a mapping, o, After execution, control must return to the fetch routine by, executing an unconditional branch, The microinstruction in control memory contains a set of bits to, initiate microoperations in computer registers and other bits to, specify the method by which the next address is obtained, Conditional branching is obtained by using part of the microinstruction to, select a specific status bit in order to determine its condition, The status conditions are special bits in the system that provide parameter, information such as the carry-out of an adder, the sign bit of a, number, the mode bits of an instruction, and i/o status conditions, The status bits, together with the field in the microinstruction that, specifies a branch address, control the branch logic, The branch logic tests the condition, if met then branches, otherwise,, increments the CAR, If there are 8 status bit conditions, then 3 bits in the microinstruction are, used to specify the condition and provide the selection variables for, the multiplexer, , Computer Organization, , Page 43

Page 49 :

Computer Organization, , , , , , , , , , , , , For unconditional branching, fix the value of one status bit to be one, load the branch address from control memory into the CAR, A special type of branch exists when a microinstruction specifies a, branch to the first word in control memory where a microprogram, routine is located, The status bits for this type of branch are the bits in the opcode, Assume an opcode of four bits and a control memory of 128 locations, The mapping process converts the 4-bit opcode to a 7-bit address for, control memory, This provides for each computer instruction a microprogram routine, with a capacity of four microinstructions, Subroutines are programs that are used by other routines to, accomplish a particular task and can be called from any point, within the main body of the microprogram, Frequently many microprograms contain identical section of code, Microinstructions can be saved by employing subroutines that use, common sections of microcode, Microprograms that use subroutines must have a provisions for storing, the return address during a subroutine call and restoring the address, during a subroutine return, A subroutine register is used as the source and destination for the addresses, , Computer Organization, , Page 44

Page 50 :

Computer Organization, UNIT III, Computer Processing Unit Organization, Introduction to CPU, The operation or task that must perform by CPU is:, • Fetch Instruction: The CPU reads an instruction from memory., • Interpret Instruction: The instruction is decoded to determine what action is, required., • Fetch Data: The execution of an instruction may require reading data from memory, or I/O module., • Process data: The execution of an instruction may require performing some arithmetic, or logical operation on data., • Write data: The result of an execution may require writing data to memory or an I/O, module., To do these tasks, it should be clear that the CPU needs to store some data temporarily., It must remember the location of the last instruction so that it can know where to get the, next instruction. It needs to store instructions and data temporarily while an instruction, is being executed. In other words, the CPU needs a small internal memory. These, storage locations are generally referred as registers., The major components of the CPU are an arithmetic and logic unit (ALU) and a control, unit (CU). The ALU does the actual computation or processing of data. The CU controls, the movement of data and instruction into and out of the CPU and controls the operation, of the ALU., The CPU is connected to the rest of the system through system bus. Through system, bus, data or information gets transferred between the CPU and the other component of, the system. The system bus may have three components:, Data Bus: Data bus is used to transfer the data between main memory and CPU., Address Bus: Address bus is used to access a particular memory location by putting the, address of the memory location., Control Bus: Control bus is used to provide the different control signal generated by, CPU to different part of the system., As for example, memory read is a signal generated by CPU to indicate that a memory, read operation has to be performed. Through control bus this signal is transferred to, memory module to indicate the required operation., , Figure 1: CPU with the system bus., There are three basic components of CPU: register bank, ALU and Control Unit. There, , Computer Organization, , Page 45

Page 51 :

Computer Organization, are several data movements between these units and for that an internal CPU bus is, used. Internal CPU bus is needed to transfer data between the various registers and the, ALU., , Figure 2 : Internal Structure of CPU, Stack Organization:, A useful feature that is included in the CPU of most computers is a stack or last in, first, out (LIFO) list. A stack is a storage device that stores information in such a manner that, the item stored last is the first item retrieved. The operation of a stack can be compared, to a stack of trays. The last tray placed on top of the stack is the first to be taken off., The stack in digital computers is essentially a memory unit with an address register that, can only( after an initial value is loaded in to it).The register that hold the address for the, stack is called a stack pointer (SP) because its value always points at the top item in, stack. Contrary to a stack of trays where the tray it self may be taken out or inserted, the, physical registers of a stack are always available for reading or writing., The two operation of stack are the insertion and deletion of items. The operation of, insertion is called PUSH because it can be thought of as the result of pushing a new item, on top. The operation of deletion is called POP because it can be thought of as the result, of removing one item so that the stack pops up. However, nothing is pushed or popped, in a computer stack. These operations are simulated by incrementing or decrementing, the stack pointer register., , Computer Organization, , Page 46

Page 52 :

Computer Organization, Register stack:, A stack can be placed in a portion of a large memory or it can be organized as a, collection of a finite number of memory words or registers. Figure X shows the, organization of a 64-word register stack. The stack pointer register SP contains a binary, number whose value is equal to the address of the word that is currently on top of the, stack. Three items are placed in the stack: A, B, and C in the order. item C is on the top, of the stack so that the content of sp is now 3. To remove the top item, the stack is, popped by reading the memory word at address 3 and decrementing the content of SP., Item B is now on top of the stack since SP holds address 2. To insert a new item, the, stack is pushed by incrementing SP and writing a word in the next higher location in the, stack. Note that item C has read out but not physically removed. This does not matter, because when the stack is pushed, a new item is written in its place., In a 64-word stack, the stack pointer contains 6 bits because 26 =64. since SP has only, six bits, it cannot exceed a number grater than 63(111111 in binary). When 63 is, incremented by 1, the result is 0 since 111111 + 1 =1000000 in binary, but SP can, accommodate only the six least significant bits. Similarly, when 000000 is decremented, by 1, the result is 111111. The one bit register Full is set to 1 when the stack is full, and, the one-bit register EMTY is set to 1 when the stack is empty of items. DR is the data, register that holds the binary data to be written in to or read out of the stack., , Figure 3: Block Diagram Of A 64-Word Stack, Initially, SP is cleared to 0, Emty is set to 1, and Full is cleared to 0, so that SP points to, the word at address o and the stack is marked empty and not full. if the stack is not full ,, a new item is inserted with a push operation. the push operation is implemented with the, following sequence of micro-operation., SP ←SP + 1, M(SP) ← DR, if (sp=0) then (Full ← 1), Emty ← 0, , (Increment stack pointer), (Write item on top of the stack), (Check if stack is full), ( Marked the stack not empty), , Computer Organization, , Page 47

Page 53 :